“Defense in depth” gets used constantly in cloud security conversations. It shows up in vendor decks, MSP proposals, and compliance documentation. Most organizations can say the words. Far fewer can draw the model. That gap, between the phrase and the practice, is where breaches happen.

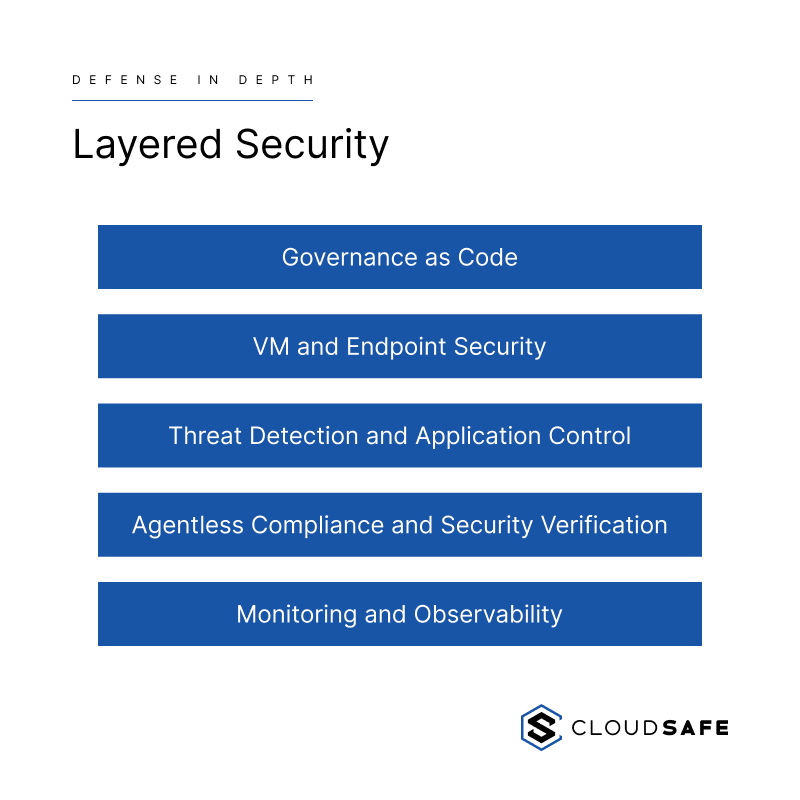

A real layered security model isn’t a collection of tools pointed at the same environment. It’s an architecture where each layer has a defined role, integrates with the layers around it, and provides a form of verification that the others can’t. In a modern cloud environment, that architecture has five distinct layers. Here’s what each one does, why it matters, and how they connect.

Layer 1: The Foundation (Governance as Code)

The foundation layer isn’t a tool. It’s a policy baseline, defined as code at the top level and applied consistently downward across every account, subscription, and service in the environment. In Azure environments, that means Azure Policy. In AWS, it means AWS Config rules, which evaluate resources for compliance, and Service Control Policies (SCPs), which set the maximum permissions available to accounts across an organization. The specific mechanism matters less than the discipline it represents: deciding what “correct” looks like before anything else gets built, and enforcing that definition automatically.

When the foundation is in place, every resource that gets provisioned is evaluated against a known standard from the moment it exists. When a configuration drifts, the drift is detected and flagged automatically instead of waiting for someone to stumble across it. When something gets changed that shouldn’t have been, there’s a record and a response.
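To make the idea concrete, here is a minimal Python sketch of a baseline expressed as data and evaluated in code. It is not Azure Policy, AWS Config, or SCP syntax. The rule names (`storage_public_access`, `encryption_at_rest`, `allowed_regions`), the `Resource` shape, and the `evaluate` and `provision` functions are hypothetical, chosen only to show a resource being checked against a defined standard at creation time and again later to catch drift.

```python
from dataclasses import dataclass, field

# Hypothetical baseline: each entry names a setting and the value "correct" requires.
# Real platforms express this in their own policy formats; this is only an illustration.
BASELINE = {
    "storage_public_access": False,
    "encryption_at_rest": True,
    "allowed_regions": {"eastus", "westus2"},
}


@dataclass
class Resource:
    name: str
    region: str
    settings: dict = field(default_factory=dict)


def evaluate(resource: Resource, baseline: dict = BASELINE) -> list[str]:
    """Return a list of violations; an empty list means the resource is compliant."""
    violations = []
    if resource.region not in baseline["allowed_regions"]:
        violations.append(f"{resource.name}: region {resource.region} is not allowed")
    for key in ("storage_public_access", "encryption_at_rest"):
        expected = baseline[key]
        actual = resource.settings.get(key)
        if actual != expected:
            violations.append(f"{resource.name}: {key} is {actual!r}, expected {expected!r}")
    return violations


def provision(resource: Resource) -> bool:
    """Deny-style gate: refuse to create a resource that violates the baseline."""
    problems = evaluate(resource)
    for problem in problems:
        print("DENIED", problem)
    return not problems


if __name__ == "__main__":
    logs = Resource("logs", "eastus", {"storage_public_access": False, "encryption_at_rest": True})
    print(provision(logs))                          # passes the gate at creation
    logs.settings["storage_public_access"] = True   # someone changes it later
    print(evaluate(logs))                           # re-evaluation reports the drift
```

The same `evaluate` function serves both moments: as a gate before provisioning and as a re-check afterward. That pairing is the property the rest of the model depends on.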

When the foundation isn’t in place, every other security investment is compensating for drift. Organizations without a governance layer spend their time reacting to misconfigurations rather than preventing them. The tools above this layer can catch problems, but they can’t fix the fact that there’s no defined standard to measure against. Getting this layer right is what makes everything else meaningful.

Layer 2: VM and Endpoint Security

Virtual machine security in a cloud environment carries different risks than traditional on-premises endpoint protection. Workloads spin up and down dynamically, environments scale in response to demand, and the attack surface shifts constantly. A security approach designed for static infrastructure doesn’t map cleanly onto this reality.

Endpoint security platforms like SentinelOne provide continuous protection at the VM level, built for workloads that appear, scale, and disappear, so coverage follows the environment as it changes underneath. This layer watches what’s happening inside the workloads in real time, with the goal of detecting threats before they can move laterally or establish persistence.

This layer and the foundation layer serve different functions. Policy baselines define what the environment should look like. Endpoint security watches what’s happening inside it. Both are necessary. Neither substitutes for the other. An environment with strong governance but no endpoint protection has a defined standard and no visibility into whether threats are operating within it.

Layer 3: Threat Detection and Application Control

Threat detection platforms like Huntress and application control tools like ThreatLocker serve distinct but complementary functions at this layer. Threat detection focuses on finding threats that have already made it into the environment: persistent footholds, compromised credentials, and attacker behavior that blends into normal activity. Application control focuses on preventing unauthorized applications from executing in the first place. Together, they close the gap between detection and prevention that neither category covers alone.
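Application control is often implemented as a default-deny allowlist. The Python sketch below shows that general idea under stated assumptions: approved applications are identified by SHA-256 digest, and the `ApplicationControl` class and its `may_execute` method are hypothetical names, not ThreatLocker’s mechanism or API.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Identify a binary by the SHA-256 digest of its contents."""
    return hashlib.sha256(data).hexdigest()


class ApplicationControl:
    """Hypothetical default-deny application control policy."""

    def __init__(self, approved: dict[str, str]):
        # Maps digest -> human-readable name of the approved application.
        self.approved = approved

    def may_execute(self, binary: bytes) -> bool:
        """Only binaries whose hash is on the allowlist may run; everything else is denied."""
        return sha256_of(binary) in self.approved


if __name__ == "__main__":
    approved_tool = b"contents of an approved binary"
    policy = ApplicationControl({sha256_of(approved_tool): "approved-tool"})
    print(policy.may_execute(approved_tool))            # on the allowlist
    print(policy.may_execute(approved_tool + b"\x00"))  # modified copy, different hash
    print(policy.may_execute(b"unknown download"))      # never approved
```

Keying on a hash rather than a file name or path means a renamed, patched, or tampered binary no longer matches and stays blocked until someone approves it. That default-deny behavior is what lets application control stop software nobody has seen before.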

This is where many mid-market organizations have the most significant blind spots. Endpoint protection is strongest against threats it can recognize. Threat detection and application control handle the space between “known bad” and “unknown,” the category that includes many of the attacks that actually succeed. For environments handling sensitive data or operating under compliance requirements, this layer addresses a category of risk that no other layer in the model is positioned to cover.

Layer 4: Agentless Compliance and Security Verification

Agentless cloud security platforms like Orca Security operate as a second verification layer on top of the native policy enforcement already in place at the foundation. The agentless approach reads the environment through the cloud provider’s APIs rather than through software installed on each workload, so it can evaluate the entire environment without the operational overhead of deploying and managing agents. Every account and subscription is measured against the defined policy baseline on an ongoing basis. Any configuration that drifts out of compliance triggers an alert, giving the team two independent confirmation paths rather than one.
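A minimal Python sketch of the two-path idea follows. It assumes a configuration snapshot already collected through cloud provider APIs (`snapshot`, mapping account name to settings) and a per-account compliance status reported by the native policy engine (`native_status`). Both shapes, and the `independent_check` and `reconcile` functions, are hypothetical and do not reflect Orca’s API. The point is the comparison: drift confirmed by both paths, drift only the independent path sees, and accounts the native engine isn’t covering at all.

```python
# Hypothetical shape: account name -> settings read through cloud provider APIs.
Snapshot = dict[str, dict[str, object]]


def independent_check(snapshot: Snapshot, baseline: dict[str, object]) -> dict[str, list[str]]:
    """Re-evaluate every account against the baseline, independently of the native engine."""
    findings = {}
    for account, settings in snapshot.items():
        findings[account] = [key for key, expected in baseline.items() if settings.get(key) != expected]
    return findings


def reconcile(native_status: dict[str, bool], findings: dict[str, list[str]]) -> list[str]:
    """Compare the two confirmation paths and return one alert per account that needs attention."""
    alerts = []
    for account in sorted(findings):
        drift = findings[account]
        native_ok = native_status.get(account)  # None if the native engine has no record
        if native_ok is None:
            alerts.append(f"{account}: not covered by native enforcement; drift in {drift}")
        elif drift and native_ok:
            alerts.append(f"{account}: paths disagree; native reports compliant, drift in {drift}")
        elif drift:
            alerts.append(f"{account}: drift confirmed by both paths in {drift}")
        elif not native_ok:
            alerts.append(f"{account}: native reports noncompliance not seen in snapshot")
    return alerts


if __name__ == "__main__":
    baseline = {"public_access": False, "mfa_required": True}
    snapshot = {
        "prod": {"public_access": True, "mfa_required": True},
        "dev": {"public_access": False, "mfa_required": True},
    }
    for alert in reconcile({"prod": True, "dev": True}, independent_check(snapshot, baseline)):
        print(alert)
```

The most useful alert is the disagreement case, where native enforcement reports an account as compliant but the independent re-evaluation finds drift. That is the gap a single confirmation path cannot see.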

The value here isn’t redundancy for its own sake. It’s the acknowledgment that no environment is perfectly managed at all times. Configurations get changed, exceptions get made, and things get missed. Even well-run environments experience configuration drift. Having an independent layer that continuously re-evaluates the environment against policy means that when something slips, it gets caught and corrected before it becomes a vulnerability.

The combination of native policy enforcement plus agentless verification creates a level of confidence that either approach alone cannot provide. It also creates a meaningful separation between the team responsible for managing the environment and the system responsible for verifying it, which matters both operationally and from a compliance audit perspective.

Layer 5: Monitoring and Observability

Infrastructure monitoring platforms like LogicMonitor provide visibility across the full environment: private cloud, public cloud, and everything in between. This layer serves a different function than the security tools in the earlier layers. Endpoint security platforms like SentinelOne, threat detection tools like Huntress, and application control platforms like ThreatLocker focus on threats and behavior. Agentless security platforms like Orca focus on compliance posture. Infrastructure monitoring focuses on the health, performance, and operational state of the infrastructure itself.

That distinction matters because anomalies in performance are frequently early indicators of security events. Unusual resource consumption, unexpected network traffic patterns, and infrastructure behavior that falls outside normal baselines can all signal that something is wrong before a security tool catches it explicitly. Without this layer, those signals are easy to miss.
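One common way to define “outside normal baselines” is a trailing statistical baseline: compare each new sample to the mean and standard deviation of the samples just before it. The Python sketch below assumes a plain list of metric values (for example, outbound traffic per interval) and hypothetical defaults of a 12-sample window and a 3-standard-deviation threshold. It illustrates the general technique, not LogicMonitor’s anomaly detection.

```python
from statistics import mean, stdev


def anomalies(samples: list[float], window: int = 12, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the trailing baseline.

    For each point after the first `window` samples, the baseline is the mean and
    standard deviation of the preceding `window` samples (window must be >= 2).
    A point is flagged when it lies more than `threshold` standard deviations
    from that mean.
    """
    flagged = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is a deviation.
            if samples[i] != mu:
                flagged.append(i)
        elif abs(samples[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical outbound traffic (MB per interval) with one sudden spike.
    traffic = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 99, 100, 480, 100]
    print(anomalies(traffic))  # flags index 12, the spike
```

A single spike inflates the standard deviation of the windows that follow it, so points right after an anomaly are less likely to be flagged. Production monitoring usually accounts for seasonality and outliers more carefully; this sketch only shows the baseline idea.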

Monitoring is also what ties the model together operationally. Each of the layers below it operates with a degree of independence. Observability gives the team a unified view of the environment, ensuring that nothing operates in silence and that the right people are alerted when something changes, whether that change is a security event, a configuration drift, or an infrastructure anomaly that warrants investigation.
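The routing half of that job can be sketched as a small lookup from change type to recipients. In this Python sketch the three change types mirror the categories named in the paragraph above, while the team names, the `Alert` fields, and the `route` function are hypothetical. The design choice worth noting is the fallback queue: an alert with no configured owner still reaches someone instead of being dropped.

```python
from dataclasses import dataclass
from enum import Enum


class ChangeType(Enum):
    SECURITY_EVENT = "security_event"
    CONFIG_DRIFT = "config_drift"
    INFRA_ANOMALY = "infra_anomaly"


# Hypothetical routing table: which team owns each kind of change.
ROUTES = {
    ChangeType.SECURITY_EVENT: ["security-oncall"],
    ChangeType.CONFIG_DRIFT: ["cloud-platform", "compliance"],
    ChangeType.INFRA_ANOMALY: ["infrastructure-oncall"],
}


@dataclass
class Alert:
    source: str        # which layer raised it, e.g. "endpoint" or "agentless-scan"
    change: ChangeType
    summary: str


def route(alert: Alert, routes: dict = ROUTES, fallback: tuple = ("noc",)) -> list[str]:
    """Return the recipients for an alert; unrouted alerts go to a fallback queue."""
    return list(routes.get(alert.change, fallback))


if __name__ == "__main__":
    drift = Alert("agentless-scan", ChangeType.CONFIG_DRIFT, "public access enabled on storage")
    print(route(drift))
```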

Why Integration Is the Point

The tools in this model aren’t valuable in isolation. What makes the model work is the way each layer connects to and verifies against the others. Governance defines the baseline. Endpoint security watches behavior inside it. Threat detection and application control close the gaps between known and unknown. Agentless verification confirms the baseline is holding. Monitoring ensures the whole environment is visible and nothing operates without oversight. Remove any one of those connections and the model has a gap.

That integration also extends across environments. The same toolchain that covers a private cloud environment carries over into public cloud, creating consistent security coverage regardless of where workloads live. For organizations running hybrid environments that span on-premises infrastructure and Azure or AWS, that consistency is critical. A security model that works in one environment but not another isn’t a layered model. It’s a partial solution with blind spots built in.

What This Means for Mid-Market Organizations

Most mid-market organizations don’t have the internal resources to design, build, and maintain a security model at this level. That’s not a criticism. It’s the reality of operating with a lean IT team while managing complex infrastructure and compliance obligations across manufacturing, financial services, healthcare, or insurance environments where the cost of getting it wrong is significant.

The question isn’t whether to build it internally. It’s whether the MSP managing your environment has actually built it, or whether they’re offering a loosely connected collection of tools and calling it a layered model. The second scenario is more common than it should be. Many providers who manage cloud environments lack a clear answer when asked to explain their security architecture in specific terms.

The difference is visible if you know what to ask. A provider with a real defense-in-depth model can explain every layer, what it does, how it integrates with the layers around it, and what happens operationally when something triggers an alert. A provider without one will speak in generalities. For organizations evaluating MSPs, or reassessing the one they currently have, that conversation is one of the most important due diligence steps they can take.

A Model Built Around Control

A well-built defense-in-depth model isn’t about having the most tools. It’s about having a clear, enforceable idea of what your environment is supposed to look like, and a set of integrated layers that continuously verify it, detect deviations, and respond before those deviations become incidents. That level of control is what separates organizations that manage security proactively from those that discover problems after the fact.

For mid-market organizations evaluating their current security posture or assessing a potential managed services partner, the starting point is understanding what’s actually in place. CloudSAFE offers security assessments designed to map your current environment against a real layered model and identify where the gaps are. Schedule a consultation to get a clear picture of where you stand.

Frequently Asked Questions

Q: What is the difference between having cloud security tools and having a defense-in-depth model?

A: Having tools means you have individual capabilities pointed at your environment. Having a defense-in-depth model means those tools are integrated into a deliberate architecture where each layer has a defined role, verifies against the others, and operates against a consistent policy baseline.

Q: How do I know if my current MSP has actually built a layered security model?

A: Ask them to walk through each layer of their security architecture specifically: what tools they use, what each tool does, and what happens operationally when something triggers an alert. A provider with a real model can answer those questions in detail. Vague or generalized answers are a signal that the model isn’t there.

Q: Why does governance as code need to come before anything else in the security stack?

A: Because every layer above it depends on having a defined standard to measure against. Without a policy baseline, security tools are detecting and responding to problems with no clear definition of what “correct” looks like. Governance as code establishes that definition and enforces it automatically from the moment a resource is provisioned.

Q: What does agentless security verification mean and why does it matter?

A: Agentless verification means the tool evaluates your environment on an ongoing basis without requiring software agents deployed on every workload. It matters because it removes the operational overhead of managing agents, avoids the coverage gaps that appear when an agent is missing or not running on a workload, and provides an independent second confirmation layer on top of your native policy enforcement.

Q: How does a defense-in-depth model support compliance requirements like SOC 2, HIPAA, or PCI DSS?

A: A properly built layered model creates continuous, documented evidence that your environment is operating within defined policy boundaries. The governance layer enforces controls automatically, the agentless verification layer confirms they are holding, and the monitoring layer provides the audit trail. Together, they shift compliance from a periodic manual exercise toward an ongoing, largely automated process.