Most organizations test their disaster recovery plans once a year, if at all. But your infrastructure changes constantly, and each change introduces risk you haven’t validated. The better approach: tie testing frequency to your rate of change, use tiered testing methods that don’t shut down operations, and treat Q1 as your opportunity to validate recovery before the year’s big projects roll out.

69% of organizations believed they were prepared for a ransomware attack. After getting hit, that confidence dropped by more than 20%. The difference between those two numbers? Untested assumptions.

According to Veeam’s 2025 Ransomware Trends Report, most organizations feel ready until reality tests them. And by then, the gaps in their disaster recovery plans have already cost them time, data, and money.

If your DR testing cadence is “once a year” or “whenever we get around to it,” you’re not alone. But you’re also not as protected as you think.

The DR Testing Reality

The confidence gap is real. Veeam’s research surveyed 1,300 organizations, and the pattern was consistent: teams believed their recovery plans were solid right up until they needed them. Post-attack, that confidence cratered.

The numbers behind this aren’t surprising once you see them. Only 50% of businesses test their DR plan annually. Another 7% don’t test at all. And only 44% of organizations include backup verifications and frequencies in their ransomware response playbook.

Having a plan isn’t the same as having a working plan. Documentation gives you a starting point. Testing tells you whether that starting point actually leads somewhere. Without regular validation, your DR plan is a theory, not a capability.

Why Annual Testing Fails

Your infrastructure doesn’t follow a calendar. Code deployments, hardware upgrades, storage migrations, staff changes. These happen throughout the year, not on a convenient annual schedule. Each one can quietly break your recovery process without anyone noticing.

The rate of change is the real driver of testing frequency. Thinking about DR testing as a yearly event misses the point entirely. The question isn’t “when did we last test?” It’s “how much has changed since we last tested?”

Untested changes stack up. A new server here, a storage policy tweak there, a couple of staff transitions. None of them seem significant on their own. But six months of small changes can add up to a recovery plan that no longer reflects reality. And you won’t know until you need it to work.

When to Test: A Change-Based Framework

Tie testing to change, not the calendar. Any significant change in code, hardware, physical location, or storage medium should ideally trigger a test. The goal is validating that your recovery process still works after something moves.

Not every change requires a full failover. Build a review process that assesses change type and risk to recovery success. A major storage migration warrants more scrutiny than a routine patch. Match the test scope to the change scope.

For fast-moving environments where testing every change isn’t practical, establish a minimum baseline. Quarterly validation for critical systems, with targeted tests after high-risk changes, keeps you from drifting too far from a working recovery state.

Changes that should trigger a DR review or test:

- Infrastructure changes: new servers, storage systems, network configurations

- Application changes: major deployments, version upgrades, new integrations

- Location changes: data center moves, cloud migrations, new replication targets

- Personnel changes: new staff in recovery roles, departures of key team members

- Vendor changes: new backup software, different DR providers, updated SLAs

Testing Doesn’t Mean Shutting Down

The misconception that keeps organizations from testing more often: DR testing is a massive production event. Many teams avoid it because they picture a full-scale tabletop exercise that disrupts operations and requires all hands on deck. That’s one type of test. It’s not the only type.

Testing can be divided into focused areas based on recent changes. Validate a runbook. Walk through a checklist. Train a new team member on recovery procedures. These smaller efforts count, and they catch problems before a full failover ever happens.

Business doesn’t have to stop for you to validate your recovery capability. Targeted recovery tests, documentation reviews, and staff training can happen alongside normal operations. The goal is continuous validation, not a single annual disruption.

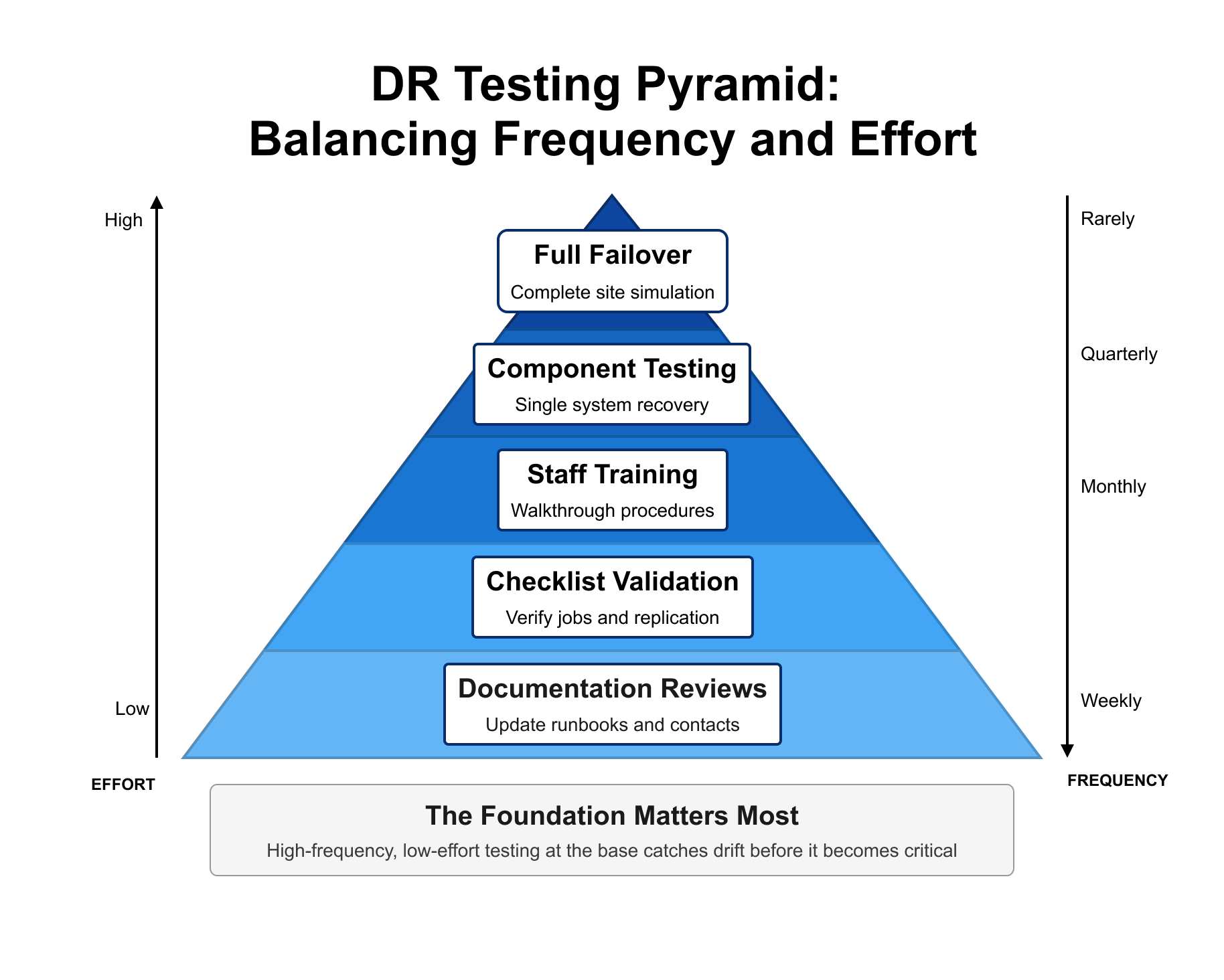

A tiered approach to DR testing:

- Documentation reviews: Verify runbooks, contact lists, and procedures are current. Low effort, high value for catching drift.

- Checklist validation: Confirm backup jobs are running, replication is healthy, recovery targets are accessible.

- Staff training: Walk new team members through their recovery responsibilities. Their questions often reveal gaps.

- Component testing: Recover a single system or application to validate the process works.

- Full failover: Simulate complete site loss and execute recovery end-to-end. The gold standard, but not the only standard.

The Consequences of Skipping

The stakes have changed. Veeam’s previous Ransomware Trends Report (2024) found that 96% of ransomware attacks targeted backup repositories. Attackers know that backups are your escape route, so they go after them first.

Even organizations that maintain backups face risk during recovery. 63% of organizations risk reintroducing infections during restoration because they skip critical validation steps. Pressure to restore operations quickly leads teams to cut corners, and those shortcuts can mean recovering the same malware that caused the outage.

The consequences of an untested plan are measured in weeks, not hours. When recovery fails, organizations face extended downtime, data loss, and in some cases, complete inability to recover. Ransomware scenarios are the most severe, but hardware failures, natural disasters, and human error can all expose the same gaps.

Building a Program That Stays Current

A mature DR testing program isn’t defined by a single annual exercise. It’s defined by continuous validation tied to how your environment actually operates.

Markers of a well-tested DR program:

- RTO/RPO trend tracking: You measure recovery times and data loss windows with each test, and those numbers improve over time.

- Documented runbooks that evolve: Your recovery procedures get updated after every test based on what worked and what didn’t.

- Post-test debriefs: Every test ends with a review that identifies gaps and assigns action items.

- Change-triggered testing: Your team has a process for evaluating whether infrastructure changes require DR validation.

- Staff readiness: New team members get trained on recovery procedures, and that training is part of onboarding.

The Q1 Opportunity

The new year is an ideal window for DR testing. Most organizations locked down changes at year-end. Big projects and deployments are being planned for the months ahead, but they haven’t rolled out yet.

While things are stable, test. Validate that your recovery capability reflects your current environment before the next wave of changes begins. It’s easier to establish a clean baseline now than to chase it later.

If your last DR test was more than a few months ago, or if significant changes have happened since, Q1 is your opportunity. Don’t wait for the calendar to tell you it’s time. Your infrastructure already has.

FAQ

Q: How often should we test our disaster recovery plan?

A: Testing frequency should be tied to your rate of change, not a fixed calendar schedule. At minimum, critical systems should be validated quarterly, with additional tests triggered by significant infrastructure, application, or personnel changes.

Q: Does DR testing require shutting down production systems?

A: No. Testing can range from documentation reviews and checklist validation to targeted component tests. Full failover exercises are valuable but not the only form of valid testing. Many tests can run alongside normal operations.

Q: What changes should trigger a DR test?

A: Infrastructure changes (servers, storage, network), application changes (major deployments, upgrades), location changes (data center moves, cloud migrations), personnel changes (new recovery staff), and vendor changes (backup software, DR providers) should all prompt a review or test.

Q: What’s the risk of not testing regularly?

A: Untested plans fail when needed most. 96% of ransomware attacks target backups, and 63% of organizations risk reintroducing infections during restoration due to skipped validation steps. Recovery can take weeks or fail entirely.

Q: What does a mature DR testing program look like?

A: Mature programs track RTO/RPO trends over time, update runbooks after every test, conduct post-test debriefs, tie testing to infrastructure changes, and train new staff on recovery procedures as part of onboarding.